Towards safe and reliable autonomous driving: IBV’s progress within the Medusa Project

17 September 2025.

Authors: Luis I. Sánchez Palop, Begoña Mateo Martínez, José Solaz Sanahuja, Elisa Signes i Pérez

Instituto de Biomecánica (IBV)

The MEDUSA Network, led by the Instituto de Biomecánica (IBV), concluded on 30 June 2025. Three other Spanish technology centres, CTIC (Asturias), IKERLAN (Basque Country) and TECNALIA (Basque Country), collaborated with the IBV on the project, which was part of the CDTI-funded Cervera Programme for the Generation of Networks of Excellence.

Throughout the project, the members of the Network worked together to develop various technologies related to all aspects of Connected Cooperative Automated Mobility (CCAM), such as sensorisation to understand what is happening inside the vehicle (i.e. passengers) and outside (i.e. other road users and infrastructure), as well as vehicle-to-vehicle and vehicle-to-infrastructure communication and the processing of the data thus generated.

What set the MEDUSA Network apart from similar projects was its focus on placing the human factor and users at the heart of the entire innovation process. This included developing technologies to monitor the state of vehicle occupants and ensuring the appropriate and ethical use of the generated information.

For the IBV, the project has enabled significant progress in four of our primary areas of work: enhancing our HAV (Human Autonomous Vehicle) simulator, which is designed to better integrate drivers and passengers into CCAM design; improving the characterisation of users and of the emotional and cognitive state of occupants inside the vehicle; developing a virtual reality pedestrian laboratory as part of our Immersive Mobility Environment to analyse pedestrian behaviour; and developing interior design methodologies that take into account the new activities people will be able to perform in autonomous vehicles.

The progress made by the IBV and by CTIC, IKERLAN and TECNALIA will contribute to a new mobility that incorporates the human factor as a core element of its design, thereby ensuring that it makes a positive impact on society as a whole, including users and other people who interact with it.

INTRODUCTION

As part of the MEDUSA Network, the IBV focused its work on identifying, compiling and developing Human Factor techniques and tools applied to autonomous vehicles in order to place people at the centre of the development of the new CCAM autonomous mobility.

For example, we conducted an extensive analysis of participants’ experiences in controlled laboratory environments, including the HAV driving simulator and virtual reality scenarios. This allowed us to identify a series of methodologies that will enable us to move towards the development of an empathic autonomous vehicle capable of adapting to its occupants and optimising their user experience.

More specifically, we worked on the following areas of research:

- Improving the HAV simulation environment so that both drivers and passengers can be integrated into the design of every level of autonomous driving.

- Developing passenger-focused cabin design methodologies to create a more empathic vehicle that takes into account people’s different needs and the activities performed in the vehicle.

- Developing the pedestrian laboratory within the Virtual Reality Immersive Mobility Environment in order to integrate pedestrians into the behaviour of autonomous vehicles, thereby making it possible to enhance the vehicle’s empathy towards other road users.

- Improving the IBV’s VitalWise physiological signs recording system and the algorithms used to characterise cognitive and emotional states for use inside the vehicle, in the real world.

The following is a summary of the results obtained by the IBV from the start of the project in November 2023 to its conclusion in June 2025.

THE HUMAN AUTONOMOUS VEHICLE (HAV): A SIMULATOR FOR INCORPORATING PEOPLE INTO THE DESIGN OF THE NEW MOBILITY

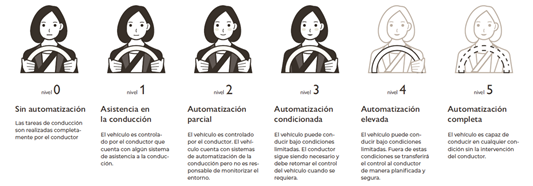

The first area of innovation addressed within the MEDUSA Network was improving the immersive capacity of the Human Autonomous Vehicle (HAV), a driving simulator developed by the IBV. The HAV enables us to emulate the behaviour of road vehicles with any degree of automation, ranging from traditional vehicles (level L0 in the SAE J3016 taxonomy), where the person at the wheel has full control, to completely automated vehicles (L5).

Figure 1. Driving automation levels

The simulator’s high level of realism is achieved through the use of a dynamic platform that transfers the vehicle’s accelerations to the user, three 55” screens that present the simulated environment in an immersive and enveloping way, and a driving environment (steering wheel, pedals, seat, etc.) to control the vehicle. Together, these features allow users to immerse themselves in the driving simulation, which runs on the open-source simulator CARLA. This software is used both to design and to run the scenarios through which the vehicle moves.

The HAV is an extremely useful tool for developing and validating various technologies, including ADAS (driving aids) and HMI (human-machine interfaces) for displaying information, as well as the behaviour of the autonomous vehicle itself (driving style, the way information is provided to users, and warnings to take over control, etc.).

During the course of this initiative, the IBV improved two key aspects of the immersion provided by the simulator: the realistic nature of the driving seat and the dynamics of the force platform used to simulate movement.

With regard to the former, we worked hard to design a driving environment similar to a cockpit or car interior by using more realistic, built-in HMI screens. As a result, we transitioned from a scenario where, although the various elements (steering wheel, seat, etc.) faithfully simulated the sensation of driving, users perceived they were in a gaming environment rather than a real vehicle, to an interior that is more recognisable to drivers.

The new design features a dashboard that incorporates steering wheel control components on one side and user information screens on the instrument panel at the front and the console on the side. This enables interaction with the user through the flexible programming of various visual and actionable events. We also added an armrest containing other user control components, as found in real vehicles, as well as an emergency push button to halt the simulation in case of danger. Finally, we added a platform and side panels to secure the entire structure to the simulator’s dynamic platform, providing greater stability for all the components.

Figure 2. New design of the HAV simulation environment.

With regard to the improved dynamic model, we started with the NVIDIA PhysX vehicle model included in the CARLA simulation software. However, tests carried out by the IBV in previous projects had revealed problems such as a lack of realism and motion sickness, since CARLA was not intended for simulations involving people at the wheel. Because the movement delivered by the platform must match the user’s subjective perception of the dynamics offered by the simulation, we optimised the balance between the precision of the dynamics and execution times. This balance is key both to reducing motion sickness, by keeping the visual and vestibular information synchronised, and to making the movement of the simulated vehicle realistic.

To improve the dynamic model responsible for the calculations used to obtain the vehicle’s accelerations, we embarked on an external collaboration with the Department of Advanced Vehicle Dynamics and Mechatronic Systems (VEDYMEC) at the Carlos III University.

We compared the current dynamic model offered by CARLA against other dynamic vehicle models. Taking into consideration the shortcomings we had identified, we decided to use a dynamic model that prioritises parameters that are essential for providing users with a realistic simulation. Thanks to this study, we have developed and integrated a new dynamic model into the HAV simulator.
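The article does not describe the model that was finally selected. Purely as an illustration of the kind of calculation a dynamic model performs to obtain the accelerations that a motion platform reproduces, the following minimal sketch uses a kinematic bicycle model. It is a deliberately simple, generic textbook model, not the model developed with VEDYMEC and not part of CARLA; every name and parameter value (`VehicleState`, `step`, `wheelbase`, `dt`) is a hypothetical choice made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class VehicleState:
    x: float = 0.0      # position along the world X axis (m)
    y: float = 0.0      # position along the world Y axis (m)
    yaw: float = 0.0    # heading angle (rad)
    speed: float = 0.0  # forward speed (m/s)


def step(state, throttle_accel, steer_angle, wheelbase=2.7, dt=0.01):
    """Advance a kinematic bicycle model by one time step.

    Returns the new state plus the longitudinal and lateral accelerations
    (m/s^2) in the vehicle frame. The wheelbase and dt defaults are
    illustrative assumptions, not values taken from the HAV simulator.
    """
    # Yaw rate produced by the steered front wheel at the current speed.
    yaw_rate = state.speed * math.tan(steer_angle) / wheelbase
    # Centripetal acceleration felt sideways by the occupant (v^2 / R).
    lateral_accel = state.speed * yaw_rate
    new_state = VehicleState(
        x=state.x + state.speed * math.cos(state.yaw) * dt,
        y=state.y + state.speed * math.sin(state.yaw) * dt,
        yaw=state.yaw + yaw_rate * dt,
        speed=state.speed + throttle_accel * dt,
    )
    return new_state, throttle_accel, lateral_accel
```

In a sketch like this, the time step `dt` is where the trade-off between precision and execution time mentioned above would show up: a smaller step tracks the motion more closely but requires more updates per second.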

IMMERSIVE MOBILITY ENVIRONMENT: OUR PEDESTRIAN LABORATORY

When developing a person-centred autonomous vehicle, we need to take into consideration not only the occupants, but also the other people who share the autonomous driving environment. Our streets and roads are used not only by motor vehicles, but also by pedestrians and by people riding bikes and scooters.

It is therefore vital to understand how these other users of the road infrastructure behave, bearing in mind that they are also the most vulnerable users, both in order to improve the realism of the simulations carried out in the HAV and to model the behaviour of the algorithms controlling autonomous vehicles. To improve their real-time detection and response capacity, autonomous driving algorithms must be trained using accurate pedestrian movement data. Companies must also prove that their systems can operate safely in urban environments where pedestrians are present.

Analysing pedestrian movement patterns helps to anticipate their actions, generate synthetic data for training purposes, and improve the vehicle’s decision-making capacity. However, it is a fact that not all pedestrians walk in the same way; their behaviour can vary depending on the individual, their age, and other factors. They run, stand still, use mobile devices and sometimes cross the road where they shouldn’t. An autonomous system must recognise these variations and be able to react appropriately. This is why realistic pedestrian data is essential.

Carrying out tests involving these road users is extremely useful for gaining an understanding of how pedestrians or cyclists react to certain traffic situations, vehicle behaviour, street layouts or traffic signs. However, conducting such tests in a real-world setting can be complicated in terms of participant safety, as well as in terms of the challenges involved in testing different traffic configurations, signage, lighting and weather conditions, etc.

This is why it is essential to have a tool that can simulate how pedestrians and cyclists behave under safe and controlled conditions. The HAV driving simulator overcomes the constraints associated with simulating vehicle behaviour. To address the need for pedestrian characterisation and modelling, the IBV has developed a pedestrian laboratory within its so-called Immersive Mobility Environment. In the future, this will be extended to include other road users such as cyclists and scooter riders.

The MEDUSA Network has created an immersive laboratory which uses virtual reality goggles to focus on pedestrian behaviour and more specifically on vehicle-to-pedestrian and pedestrian-to-infrastructure interaction, in order to incorporate their behaviour into the study.

In order to determine what was required in the laboratory, we analysed the pedestrian variables we needed to gather. We analysed the main factors influencing their behaviour, as well as the variants within the physical infrastructure (zebra crossings, traffic signs and lights, the presence of bollards, etc.), in order to design the characteristics and elements found in the different virtual reality scenarios we recreated in the laboratory. Our aim was to ensure that the key interactions between vehicles, infrastructure and pedestrians were accurately displayed in the immersive scenarios experienced by those wearing the virtual reality goggles.

Figure 3. Simulation environment developed for the Pedestrian Laboratory.

The laboratory also has a contactless motion capture system called Captury, which has a series of specific modules for analysing hand movements. We used a Meta Quest Pro virtual reality headset to present the immersive environment because it provides access to eye-tracking data. This allows us to synchronise the subject’s movement log with what they are looking at and what is happening in the simulation. This is a key feature, as knowing in what direction the subject is looking is essential to understanding a pedestrian’s intentions when they are interacting with vehicles.
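The article does not detail how the movement log and the eye-tracking data are synchronised. One common and simple approach, sketched here under the assumption that both streams carry timestamps from a shared clock, is to pair each motion sample with the gaze sample closest to it in time. The function names, the tuple layout and the `max_gap` tolerance are hypothetical and do not correspond to the Captury or Meta Quest Pro interfaces.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the value in the sorted list `timestamps` closest to `t`."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbour is closer in time.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(motion, gaze, max_gap=0.02):
    """Pair each motion sample with the nearest gaze sample.

    `motion` and `gaze` are lists of (timestamp_seconds, payload) tuples,
    sorted by timestamp. Pairs further apart than `max_gap` seconds are
    dropped rather than matched to stale data.
    """
    gaze_times = [t for t, _ in gaze]
    pairs = []
    if not gaze_times:
        return pairs
    for t, pose in motion:
        j = nearest_index(gaze_times, t)
        if abs(gaze_times[j] - t) <= max_gap:
            pairs.append((t, pose, gaze[j][1]))
    return pairs
```

Dropping pairs that exceed the tolerance, instead of always taking the nearest sample, avoids attributing an old gaze direction to a posture recorded while the eye tracker had momentarily lost the subject.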

To ensure flexibility when simulating urban scenarios, the system can personalise different aspects of the environment and adapt to different experimental conditions. These options include street signs, the time of day and the visibility afforded by the elements found in the street (trees, parked cars, bins, etc.). We are also able to simulate possible technological distractions, such as the use of smartphones or headphones. This allows us to analyse how these factors affect an individual’s attention and perception of their surroundings, and consequently their behaviour.
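As a rough illustration of how such configurable conditions could be represented in software, the sketch below models a scenario as an immutable record and generates every combination of the chosen factors. It is an assumption made for this example only: the class names, field names and values are hypothetical and do not describe the laboratory’s actual software.

```python
from dataclasses import dataclass
from enum import Enum


class Distraction(Enum):
    NONE = "none"
    SMARTPHONE = "smartphone"
    HEADPHONES = "headphones"


@dataclass(frozen=True)
class PedestrianScenario:
    time_of_day: str = "day"                   # e.g. "day", "dusk", "night"
    street_signs: tuple = ("zebra_crossing",)  # signage present in the scene
    occluders: tuple = ()                      # e.g. trees, parked cars, bins
    distraction: Distraction = Distraction.NONE


def build_conditions(times, distractions, occluder_sets):
    """Full-factorial list of scenarios, one per combination of factors."""
    return [
        PedestrianScenario(time_of_day=t, occluders=o, distraction=d)
        for t in times
        for d in distractions
        for o in occluder_sets
    ]
```

For instance, two times of day, three distraction levels and two sets of occluding objects would yield twelve experimental conditions.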

NEW DESIGN REQUIREMENTS FOR NEW USES OF THE VEHICLE INTERIOR

New tasks unrelated to driving

The advent of autonomous vehicles has given rise to what are known as Non-Driving-Related Tasks (NDRT), that is, anything the driver can do when their involvement is minimal or non-existent, such as reading, sleeping, working or watching a film.

Vehicle automation will improve passengers’ travel experience, allowing them to use their time in a more comfortable and efficient way. This change will also lead to a new philosophy in the design of vehicle interiors, and we may see a trend towards vehicles being turned into “living spaces” in which passengers can perform all sorts of activities on the go, such as working, playing games, socialising or just resting.

This transformation will lead to a wide range of new on-board experiences and changes, including most notably, configurable and versatile interiors and a new environmental experience in which we will be able to automatically adjust the vehicle’s lighting, sound and climate control systems to suit the situation and the passengers’ individual preferences, creating personalised atmospheres for every journey.

The importance of body posture in the design of autonomous vehicles

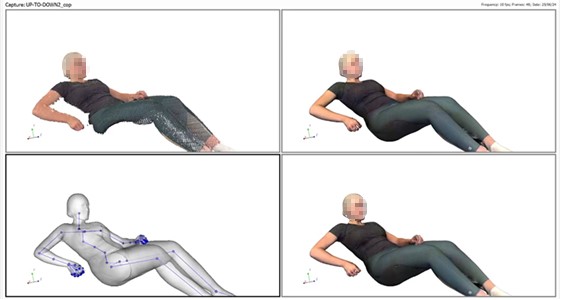

When designing the ergonomics of vehicle interiors, it is important to use avatars or mannequins that accurately simulate the postures and movement of autonomous vehicle users. The use of such avatars is essential when designing the seats and interiors of these new vehicles, not to mention their passenger restraint and safety systems, where posture is a key design parameter.

Figure 4. Example of the creation of a full-body mannequin from the body surface left when the seat is removed.

The IBV is making progress in developing these avatars thanks to its highly accurate body-scan technology, which works even when the subject is moving. However, this technology is designed to scan the visible surface of the body. Where the body is concealed, for example at the point of contact with the seat, it becomes difficult to create a 3D reconstruction.

To overcome this problem, we have been working on a new methodology that can recreate the full 3D form of a moving body, even when parts of it are hidden by the seat. This new methodology is based on the IBV’s Move4D technology. It adjusts the mesh representing the scanned person’s body to render both their pose and the deformation of the soft tissues in contact with the seat[1].

More sensitive interiors

The sounds, smells and colours perceived by our senses have an impact on our emotional state and can be used in vehicle interiors to influence the passengers’ mood.

In this regard, we have compiled the existing knowledge on the sensory aspects of the cabin, mainly those related to olfactory and musical stimuli and lighting.

Building on this review and on prior knowledge, the IBV has developed a methodology for inducing emotional moods through different stimuli, such as lighting, sounds and smells, and for defining standard ambiences that are relevant to autonomous driving. Examples include an alert mode for when the driver is drowsy or asleep and needs to be woken up urgently to take control of the vehicle; an activation mode for work; and a relaxation mode for when the vehicle enters autonomous driving mode.

Combining the analysis of the postures that people may adopt inside the vehicle, depending on the activity they want to perform, with the influence of the atmosphere on their emotions provides a starting point for designing the interiors of the autonomous vehicles of the future.

VITALWISE: A TOOL FOR MONITORING THE STATE OF VEHICLE OCCUPANTS

Cognitive states have a considerable impact on driving in traditional vehicles and in automated cars at levels L1 and L2. States such as drowsiness, fatigue (whether active or passive), mental stress and a high mental workload significantly increase the risk of accidents. In autonomous vehicles, the greater the level of automation, the lesser the impact of these cognitive states, given that the driver rarely has to intervene and can leave control to the vehicle itself. However, at intermediate levels of automation, such as L3, where the driver has to supervise the vehicle and be prepared to intervene and take control, the effect of these cognitive states is still highly relevant.

Conversely, the higher the level of automation, such as L4 or L5, the more relevant the emotional state of a person on board a vehicle becomes. Apart from its functional and utilitarian aspects, the act of driving triggers a series of emotions in a driver. Compared to conventional driving, the emotions experienced by autonomous vehicle drivers are clearly different.

In manual driving, the study of emotions focuses on their relationship with safety and performance. However, at advanced levels of automation and autonomous driving, where people are primarily passengers, their emotions may differ significantly from those experienced during manual driving. Therefore, if we are to design methodologies that go beyond focusing on safety and functionality, we must study the situations and factors that may impact and influence the emotional experience of vehicle occupants.

Of course, measuring a person’s cognitive and emotional state requires accurate measurement of their physiological signs and facial expressions. Furthermore, such measurements must be taken in a non-intrusive way that does not interfere with the subject, avoiding at all costs the need for uncomfortable recording equipment.

In this regard, the IBV’s pioneering work has centred on developing algorithms for monitoring systems that can provide a physiological characterisation of the subject using cameras inside the vehicle that can operate under different lighting conditions and while the vehicle is moving.

In previous projects, the IBV has developed models that use RGB cameras to capture visual information within the visible spectrum and combine it with physiological signs in order to evaluate and analyse facial expressions and emotional states. These models have proven effective under good lighting conditions, such as broad daylight. However, at night or in environments with poor visibility or changing conditions, the limitations inherent in RGB cameras reduce the quality of the images, which has a negative impact on the accuracy of the measurements.

Figure 5. VitalWise detects facial gestures and physiological signs.

To address the challenges posed by these low-light situations, we have worked with Near-Infrared (NIR) cameras as a viable alternative to the previous RGB cameras. NIR technology makes it possible to take highly detailed images at night, thus providing an opportunity to adapt the interpretation models to the state of the driver and passenger.

The MEDUSA Network has therefore worked hard to improve the processing of the physiological signs required to monitor the state of a subject, and to explore the detection of facial expression information in NIR images. Tests have been conducted using a real vehicle in the city of Valencia, making good use of a sandbox[2] provided by the local City Hall. To analyse the quality of the physiological signal detection and improve VitalWise’s algorithms, these tests have involved people of different physiognomies and skin colours.
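The article does not describe VitalWise’s algorithms. As a generic illustration of how a pulse rate can be recovered from video, the sketch below assumes that the average pixel intensity of a skin region has already been extracted for each frame, and looks for the dominant periodic component within a plausible heart-rate band. This is a simplified textbook approach and not the IBV’s method; the function name, the band limits and the frequency step are assumptions chosen for the example.

```python
import math


def estimate_heart_rate_bpm(signal, fps, low_hz=0.7, high_hz=3.0, step_hz=0.01):
    """Estimate a pulse rate from a per-frame mean intensity trace.

    Scans candidate frequencies in a plausible heart-rate band and returns
    the one with the highest spectral power, in beats per minute.
    """
    n = len(signal)
    mean = sum(signal) / n
    centred = [s - mean for s in signal]  # remove the constant (DC) offset

    best_freq, best_power = low_hz, -1.0
    steps = int(round((high_hz - low_hz) / step_hz))
    for k in range(steps + 1):
        freq = low_hz + k * step_hz
        w = 2.0 * math.pi * freq / fps  # angular step per frame
        re = sum(c * math.cos(w * i) for i, c in enumerate(centred))
        im = sum(c * math.sin(w * i) for i, c in enumerate(centred))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0
```

A real in-vehicle system would also have to cope with head movement, vibration and changing illumination, which is precisely why tests such as those described above, with a real vehicle and a diverse group of participants, are needed.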

Figure 6. Tests carried out, within the framework of the MEDUSA Network, with a real vehicle inside the Valencia City Council Sandbox.

CONCLUSIONS

In the coming years, developers of the new autonomous mobility are going to have to overcome numerous challenges. These range from how these vehicles behave and how they adapt to their occupants and their emotions, to how they can be integrated into the public highway and their relationship with other road users, such as cyclists or pedestrians. They must also ensure that their interior designs evolve to meet new passenger requirements.

Any progress in these developments requires accurate information to enable the characterisation of people’s needs and behaviour inside and outside the vehicle.

However, studying these aspects in a real-world scenario is not always an easy task; sometimes it may not even be possible. Due to their exceptional nature and the danger involved, certain situations cannot be recreated in a real-world context, making it necessary to study them using immersive simulation scenarios.

Consequently, the IBV has worked within the MEDUSA Network to develop and improve tools and methodologies that make it possible to advance in the design of autonomous mobility, with the ultimate aim of transferring them to industry and society as a whole. These range from highly realistic and immersive simulation environments such as HAV and our pedestrian laboratory within the Immersive Mobility Environment, to tools for determining a person’s state, such as VitalWise, as well as the impact of posture on vehicle interior design requirements, and its influence on passenger satisfaction and comfort.

Thanks to all these areas of innovation that we have worked on within the MEDUSA Network over the past eighteen months, the IBV is now ideally positioned to contribute to the development of a person-centred Connected and Cooperative Automated Mobility (CCAM). This new empathic mobility respects drivers and other road users and maximises the satisfaction of society as a whole.

Project (CER-20231011) recognised as a CERVERA Network of Excellence, funded by the Ministry of Science, Innovation and Universities through the Centre for Technological Development and Innovation E.P.E. (CDTI), under the European Union’s Recovery and Resilience Facility (MRR).

ACKNOWLEDGEMENTS

We would like to thank the members of the MEDUSA Network, the technology centres CTIC, IKERLAN and TECNALIA, and the Valencia City Council for collaborating with the project and making the city’s Sandbox available to the IBV.

BIBLIOGRAPHY

[1] Valero, J. et al. (2023). Bridging the Gap Between Body Scanning and Ergonomic Simulation of Human Body Interaction in Autonomous Car Interiors. In: Scataglini, S., Harih, G., Saeys, W., Truijen, S. (eds) Advances in Digital Human Modeling DHM 2023. Lecture Notes in Networks and Systems, vol 744. Springer, Cham. https://doi.org/10.1007/978-3-031-37848-5_4

[2] https://www.valencia.es/web/sandbox

BERTHA project – Behavioural ReplicaTion of Human drivers for CCAM (https://berthaproject.eu/)

CCAM States Representatives Group (https://www.ccam.eu/what-is-ccam/governance/ccam-states-representatives-group/)

Connected Cooperative Automated Mobility Partnership (2022). CCAM Strategic Research and Innovation Agenda.

DIAMOND project – Revealing actionable knowledge from data (https://diamond-project.eu)

European Commission (2013). Options for strengthening responsible research and innovation

Niemelä, M., Ikonen, V., Leikas, J., Kantola, K., Kulju, M., Tammela, A., & Ylikauppila, M. (2014). Human-driven design: a human-driven approach to the design of technology. In ICT and Society: 11th IFIP TC 9 International Conference on Human Choice and Computers, HCC11 2014, Turku, Finland, July 30–August 1, 2014. Proceedings 11 (pp. 78-91). Springer Berlin Heidelberg.

SAE J3016™ Recommended Practice: Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles

SuaaVE project – Colouring automated driving with human emotions (https://www.suaave.eu)

AUTHORS’ AFFILIATION

Instituto de Biomecánica de Valencia

Universitat Politècnica de València

Edificio 9C. Camino de Vera s/n

(46022) Valencia. Spain

HOW TO CITE THIS ARTICLE

Authors: Luis I. Sánchez Palop, Begoña Mateo Martínez, José Solaz Sanahuja, Elisa Signes i Pérez (17 September 2025). «Towards safe and reliable autonomous driving: IBV’s progress within the Medusa Project». Revista de Biomecánica nº 72. https://www.ibv.org/en/latest-news/towards-safe-and-reliable-autonomous-driving-ibvs-progress-within-the-medusa-project/